Note: this article was written 818 days ago and last modified 814 days ago, so some of the information may be out of date.


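Preface

This is a worked example of deploying and configuring a GPU cluster with Slurm 23.02 (the latest release at the time of writing), followed by some initial tests. The official Slurm documentation says little about end-to-end deployment, so newcomers often run into trouble at this stage. I hope this write-up helps them.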

1. Environment topology

| addr | hostname | role | slurm version | os-version | gpu type |
|---|---|---|---|---|---|
| 192.168.11.111 | slurmm | master, worker, slurmdbd, slurmrestd | 23.02.3 | 22.04 | NVIDIA GeForce RTX 2080 Ti x2 |
| 192.168.11.112 | slurmn | worker | 23.02.3 | 22.04 | NVIDIA GeForce RTX 3060 Ti x1 |

2. Basic configuration (all nodes)

2.1 Consistent hostnames

- master node

```shell
root@slurmm:~# cat /etc/hosts
127.0.0.1 localhost
127.0.1.1 slurmm
192.168.11.111 slurmm
192.168.11.112 slurmn

hostname slurmm # also update /etc/hostname
```

- worker node

```shell
root@slurmn:~# cat /etc/hosts
127.0.0.1 localhost
127.0.1.1 slurmn
192.168.11.111 slurmm
192.168.11.112 slurmn

hostname slurmn # also update /etc/hostname
```
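Every node has to resolve `slurmm` and `slurmn` to the same addresses. The following is a minimal Python sketch of such a check, not part of Slurm: the helper names `parse_hosts` and `check_hosts` are made up for illustration, and the expected mapping is taken from the table in section 1.

```python
# Sketch: verify that a hosts file maps the cluster hostnames to the expected
# addresses. parse_hosts and check_hosts are hypothetical helper names.

EXPECTED = {"slurmm": "192.168.11.111", "slurmn": "192.168.11.112"}


def parse_hosts(text):
    """Return {hostname: [addresses]} for every non-comment line."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        addr, *names = line.split()
        for name in names:
            mapping.setdefault(name, []).append(addr)
    return mapping


def check_hosts(text, expected=EXPECTED):
    """Return the hostnames whose expected address is missing from the file."""
    mapping = parse_hosts(text)
    return [host for host, ip in expected.items() if ip not in mapping.get(host, [])]


sample = """127.0.0.1 localhost
127.0.1.1 slurmm
192.168.11.111 slurmm
192.168.11.112 slurmn
"""
print("missing entries:", check_hosts(sample) or "none")
```

On a real node, the contents of `/etc/hosts` would be passed in place of `sample`.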

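2.2 Create the slurm user and group

Installing Slurm creates the slurm user automatically, but the uid/gid it receives may differ from node to node. Create the user and group by hand beforehand, on every node, so that the IDs match.

```shell
export SLURMUSER=64030
groupadd -g $SLURMUSER slurm
useradd -m -c "slurm Uid 'N' Gid Slurm" -d /home/slurm -u $SLURMUSER -g slurm -s /sbin/nologin slurm
```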
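To confirm that the IDs really match, one option is to collect the output of `getent passwd slurm` from each node and compare it. The sketch below assumes that output has already been gathered into a dictionary; `parse_passwd` and `ids_match` are illustrative names.

```python
# Sketch: confirm the slurm account has the same uid/gid on every node.
# Input is the output of `getent passwd slurm` collected from each node.

def parse_passwd(line):
    """Return (uid, gid) from one passwd-format line."""
    fields = line.strip().split(":")
    return int(fields[2]), int(fields[3])


def ids_match(lines_by_node):
    """Return True when every node reports the same (uid, gid) pair."""
    ids = {parse_passwd(line) for line in lines_by_node.values()}
    return len(ids) == 1


collected = {
    "slurmm": "slurm:x:64030:64030:slurm Uid 'N' Gid Slurm:/home/slurm:/sbin/nologin",
    "slurmn": "slurm:x:64030:64030:slurm Uid 'N' Gid Slurm:/home/slurm:/sbin/nologin",
}
print("uid/gid consistent:", ids_match(collected))
```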

2.3 Add the Slurm 23.02 PPA

```shell
sudo add-apt-repository ppa:ubuntu-hpc/slurm-wlm-23.02
sudo apt update
```

2.4 Install munge

```shell
export MUNGEUSER=1888
groupadd -g $MUNGEUSER munge
useradd -m -c "MUNGE Uid 'N' Gid Emporium" -d /var/lib/munge -u $MUNGEUSER -g munge -s /sbin/nologin munge
export DEBIAN_FRONTEND=noninteractive # suppress interactive prompts
apt install munge libmunge-dev libmunge2 -y
systemctl start munge # start munge
systemctl enable munge # start at boot
chown -R munge: /etc/munge/
chmod 400 /etc/munge/munge.key
chown -R munge: /var/lib/munge
chown -R munge: /var/run/munge # may not exist
chown -R munge: /var/log/munge

# Check that munge is running
root@slurmmaster: munge -n | unmunge | grep STATUS
STATUS: Success (0)

# Generate the munge key
/usr/sbin/mungekey -f

# On a multi-node cluster, copy /etc/munge/munge.key from the master node to every
# worker node (munge already installed), overwriting the existing key, then restart
# munge. Mind the permissions:
# chmod 400 /etc/munge/munge.key
# chown -R munge: /etc/munge/

# Restart munge on all nodes
systemctl restart munge

# From the worker node, check that the master node can decode a credential
munge -n -t 10 | ssh -p 15654 slurmm unmunge

root@slurmn:/etc/munge# munge -n -t 10 | ssh -p 15654 slurmm unmunge
The authenticity of host '[slurmm]:15654 ([10.211.55.22]:15654)' can't be established.
ED25519 key fingerprint is SHA256:hKrLsmx9/fXNhDfhxFtJ07OG6G/WyTzv013mBOIl5v8.
This key is not known by any other names
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '[slurmm]:15654' (ED25519) to the list of known hosts.
STATUS: Success (0)
ENCODE_HOST: slurmn (10.211.55.9)
ENCODE_TIME: 2023-11-04 09:10:23 +0000 (1699089023)
DECODE_TIME: 2023-11-04 09:10:25 +0000 (1699089025)
TTL: 10
CIPHER: aes128 (4)
MAC: sha256 (5)
ZIP: none (0)
UID: root (0)
GID: root (0)
LENGTH: 0

# For more on munge, see the official guide: https://github.com/dun/munge/wiki/Installation-Guide
```
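If the cross-node test fails, a mismatched key is a likely cause. A minimal sketch for comparing the key without printing it: compute a SHA-256 digest on each node (as root) and compare the results. `key_digest` and `keys_identical` are illustrative names, not munge tooling.

```python
# Sketch: fingerprint /etc/munge/munge.key so copies on different nodes can be
# compared without exposing the key itself.
import hashlib


def key_digest(path):
    """Return the SHA-256 hex digest of the file at path."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def keys_identical(digests):
    """Return True when all collected digests are the same."""
    return len(set(digests)) == 1
```

Running `key_digest("/etc/munge/munge.key")` on each node and passing the results to `keys_identical` shows whether the copy succeeded.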

2.5 Install the GPU driver

```shell
# 1. Blacklist the nouveau driver
# (tee is used so that the write itself runs under sudo)
sudo tee /etc/modprobe.d/blacklist-nouveau.conf > /dev/null << EOF
blacklist vga16fb
blacklist rivafb
blacklist rivatv
blacklist nvidiafb
blacklist nouveau
options nouveau modeset=0
EOF
update-initramfs -u
reboot

# 2. Install the latest official NVIDIA driver
wget https://us.download.nvidia.com/XFree86/Linux-x86_64/535.129.03/NVIDIA-Linux-x86_64-535.129.03.run
chmod +x NVIDIA-Linux-x86_64-535.129.03.run
./NVIDIA-Linux-x86_64-535.129.03.run -q -a --ui=none

# 3. Verify that the driver is installed
root@slurmm:/etc/slurm# nvidia-smi
Sat Nov 25 17:52:53 2023
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.129.03             Driver Version: 535.129.03   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA GeForce RTX 2080 Ti     Off | 00000000:03:00.0 Off |                  N/A |
|  0%   32C    P0              55W / 300W |      0MiB / 11264MiB |      0%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+
|   1  NVIDIA GeForce RTX 2080 Ti     Off | 00000000:04:00.0 Off |                  N/A |
| 30%   29C    P0              31W / 250W |      0MiB / 11264MiB |      0%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|  No running processes found                                                           |
+---------------------------------------------------------------------------------------+
```

3. Control node configuration

3.1 Install MySQL

```shell
export DEBIAN_FRONTEND=noninteractive
apt install mysql-server -y

# Set the root password
root@slurmm:/etc/munge# mysql -h localhost -u root
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 8
Server version: 8.0.35-0ubuntu0.22.04.1 (Ubuntu)

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY '123456';
Query OK, 0 rows affected (0.01 sec)

mysql> CREATE USER 'slurm'@'slurmm' IDENTIFIED WITH mysql_native_password BY '123456';
mysql> GRANT ALL PRIVILEGES ON *.* TO 'slurm'@'slurmm';
mysql> exit

# Append the following lines to the end of /etc/mysql/mysql.conf.d/mysqld.cnf.
# Without them, /var/log/slurm/slurmdbd.log reports this error:
# 'error: Database settings not recommended values: innodb_buffer_pool_size innodb_lock_wait_timeout'
bind-address=slurmm # set bind-address to the slurmm hostname
innodb_buffer_pool_size=1024M
innodb_log_file_size=64M
innodb_lock_wait_timeout=900

# Restart MySQL
systemctl restart mysql
```

3.2 Install the Slurm base components and database daemon (slurmctld, slurmdbd)

- Install the packages

```shell
export DEBIAN_FRONTEND=noninteractive
apt install slurm-wlm slurmd slurmctld slurmdbd slurmrestd mailutils libhttp-parser-dev libjson-c-dev libjwt-dev libyaml-dev libpmix-dev
```

- Edit /lib/systemd/system/slurmctld.service and add slurmdbd.service to the After= line in the [Unit] section

```shell
[Unit]
Description=Slurm controller daemon
After=network-online.target munge.service slurmdbd.service
Wants=network-online.target
ConditionPathExists=/etc/slurm/slurm.conf
Documentation=man:slurmctld(8)

[Service]
Type=simple
EnvironmentFile=-/etc/default/slurmctld
ExecStart=/usr/sbin/slurmctld -D -s $SLURMCTLD_OPTIONS
ExecReload=/bin/kill -HUP $MAINPID
PIDFile=/run/slurmctld.pid
LimitNOFILE=65536
TasksMax=infinity

[Install]
WantedBy=multi-user.target
```

- Configure /etc/slurm/slurm.conf. Note that the compute node section already includes the GPU information (Gres).

```shell
# slurm.conf file generated by configurator.html.
# Put this file on all nodes of your cluster.
# See the slurm.conf man page for more information.
#
ClusterName=slurmCluster
SlurmctldHost=slurmm
#SlurmctldHost=
#
#DisableRootJobs=NO
#EnforcePartLimits=NO
#Epilog=
#EpilogSlurmctld=
#FirstJobId=1
#MaxJobId=67043328
#GroupUpdateForce=0
#GroupUpdateTime=600
#JobFileAppend=0
#JobRequeue=1
#JobSubmitPlugins=lua
#KillOnBadExit=0
#LaunchType=launch/slurm
#Licenses=foo*4,bar
#MailProg=/usr/bin/mail
#MaxJobCount=10000
#MaxStepCount=40000
#MaxTasksPerNode=512
MpiDefault=none
#MpiParams=ports=#-#
#PluginDir=
#PlugStackConfig=
#PrivateData=jobs
ProctrackType=proctrack/cgroup
#Prolog=
#PrologFlags=
#PrologSlurmctld=
#PropagatePrioProcess=0
#PropagateResourceLimits=
#PropagateResourceLimitsExcept=
#RebootProgram=
ReturnToService=2
SlurmctldPidFile=/run/slurmctld.pid
SlurmctldPort=6817
SlurmdPidFile=/run/slurmd.pid
SlurmdPort=6818
SlurmdSpoolDir=/var/lib/slurm/slurmd
SlurmUser=root
#SrunEpilog=
#SrunProlog=
StateSaveLocation=/var/lib/slurm/slurmctld
SwitchType=switch/none
#TaskEpilog=
TaskPlugin=task/affinity,task/cgroup
#TaskProlog=
#TopologyPlugin=topology/tree
#TmpFS=/tmp
#TrackWCKey=no
#TreeWidth=
#UnkillableStepProgram=
#UsePAM=0
#
#
# TIMERS
#BatchStartTimeout=10
#CompleteWait=0
#EpilogMsgTime=2000
#GetEnvTimeout=2
#HealthCheckInterval=0
#HealthCheckProgram=
InactiveLimit=0
KillWait=30
#MessageTimeout=10
#ResvOverRun=0
MinJobAge=300
#OverTimeLimit=0
SlurmctldTimeout=120
SlurmdTimeout=300
#UnkillableStepTimeout=60
#VSizeFactor=0
Waittime=0
#
#
# SCHEDULING
#DefMemPerCPU=0
#MaxMemPerCPU=0
#SchedulerTimeSlice=30
SchedulerType=sched/backfill
SelectType=select/cons_tres
#
#
# JOB PRIORITY
#PriorityFlags=
#PriorityType=priority/basic
#PriorityDecayHalfLife=
#PriorityCalcPeriod=
#PriorityFavorSmall=
#PriorityMaxAge=
#PriorityUsageResetPeriod=
#PriorityWeightAge=
#PriorityWeightFairshare=
#PriorityWeightJobSize=
#PriorityWeightPartition=
#PriorityWeightQOS=
#
#
# LOGGING AND ACCOUNTING
#AccountingStorageEnforce=0
AccountingStorageHost=slurmm
#AccountingStoragePass=
AccountingStoragePort=6819
AccountingStorageType=accounting_storage/slurmdbd
AccountingStorageTRES=gres/gpu,gres/gpu:NVIDIA_GeForce_RTX_2080_Ti,gres/gpu:NVIDIA_GeForce_RTX_3060_Ti
#AccountingStorageUser=
#AccountingStoreFlags=
JobCompHost=slurmm
JobCompLoc=/var/log/slurm/slurm_jobcomp.log
#JobCompParams=
JobCompPass=123456
JobCompPort=3306
JobCompType=jobcomp/mysql
JobCompUser=slurm
#JobContainerType=job_container/none
JobAcctGatherFrequency=30
JobAcctGatherType=jobacct_gather/cgroup
SlurmctldDebug=info
SlurmctldLogFile=/var/log/slurm/slurmctld.log
SlurmdDebug=info
SlurmdLogFile=/var/log/slurm/slurmd.log
#SlurmSchedLogFile=
#SlurmSchedLogLevel=
#DebugFlags=
#
#
# POWER SAVE SUPPORT FOR IDLE NODES (optional)
#SuspendProgram=
#ResumeProgram=
#SuspendTimeout=
#ResumeTimeout=
#ResumeRate=
#SuspendExcNodes=
#SuspendExcParts=
#SuspendRate=
#SuspendTime=
#
#
# slurm configless
SlurmctldParameters=enable_configless
#
#
# JWT
AuthAltTypes=auth/jwt
AuthAltParameters=jwt_key=/etc/slurm/jwt_hs256.key
#
#
# PartitionName
PartitionName=debug Nodes=ALL Default=YES MaxTime=INFINITE State=UP
#
#
# GresTypes
GresTypes=gpu
#
#
# COMPUTE NODES
NodeName=slurmm CPUs=36 Boards=1 SocketsPerBoard=1 CoresPerSocket=18 ThreadsPerCore=2 RealMemory=31921 State=UNKNOWN Gres=gpu:NVIDIA_GeForce_RTX_2080_Ti:2
NodeName=slurmn CPUs=36 Boards=1 SocketsPerBoard=1 CoresPerSocket=18 ThreadsPerCore=2 RealMemory=15819 State=UNKNOWN Gres=gpu:NVIDIA_GeForce_RTX_3060_Ti:1
```

- Configure gres.conf: create this file under /etc/slurm

```shell
##################################################################
# Slurm's Generic Resource (GRES) configuration file
#
##################################################################
NodeName=slurmm Name=gpu File=/dev/nvidia[0-1] Type=NVIDIA_GeForce_RTX_2080_Ti
NodeName=slurmn Name=gpu File=/dev/nvidia0 Type=NVIDIA_GeForce_RTX_3060_Ti
```
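The `Type` and GPU count in gres.conf have to agree with the `Gres=` entry of the same node in slurm.conf. One way to keep them in sync is to generate both from a single description. The sketch below is only an illustration of that idea: the helper names are made up, and it assumes the GPUs appear as consecutive `/dev/nvidiaN` devices starting at 0.

```python
# Sketch: derive the gres.conf line and the matching slurm.conf Gres= entry
# from one (node, gpu_type, count) description. Helper names are hypothetical.

def device_range(count):
    """Device file spec for `count` GPUs, assuming /dev/nvidia0.. numbering."""
    return "/dev/nvidia0" if count == 1 else f"/dev/nvidia[0-{count - 1}]"


def gres_conf_line(node, gpu_type, count):
    """One line for /etc/slurm/gres.conf."""
    return f"NodeName={node} Name=gpu File={device_range(count)} Type={gpu_type}"


def node_gres(gpu_type, count):
    """The Gres= fragment for the node's line in slurm.conf."""
    return f"Gres=gpu:{gpu_type}:{count}"


for node, gpu_type, count in [
    ("slurmm", "NVIDIA_GeForce_RTX_2080_Ti", 2),
    ("slurmn", "NVIDIA_GeForce_RTX_3060_Ti", 1),
]:
    print(gres_conf_line(node, gpu_type, count))
    print(node_gres(gpu_type, count))
```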

- Configure cgroup.conf: create this file under /etc/slurm

```shell
CgroupAutomount=yes
ConstrainCores=yes
ConstrainRAMSpace=yes
```

- Configure slurmdbd (/etc/slurm/slurmdbd.conf)

```shell
# Authentication info (munge)
AuthType=auth/munge
AuthInfo=/var/run/munge/munge.socket.2
# DebugLevel=info

# slurmrestd jwt auth
AuthAltTypes=auth/jwt
AuthAltParameters=jwt_key=/etc/slurm/jwt_hs256.key

# slurmDBD info (settings for slurmdbd itself)
DbdHost=slurmm
DbdPort=6819
SlurmUser=root
DebugLevel=verbose
LogFile=/var/log/slurm/slurmdbd.log

# Database info (MySQL connection settings)
StorageType=accounting_storage/mysql
StorageHost=slurmm
StoragePort=3306
StoragePass=123456
StorageUser=slurm
StorageLoc=slurm_acct_db
```

3.3 slurmrestd configuration

- Generate the JWT key for the Slurm REST API

```shell
dd if=/dev/random of=/etc/slurm/jwt_hs256.key bs=32 count=1
chmod 0600 /etc/slurm/jwt_hs256.key
chown slurm: /etc/slurm/jwt_hs256.key

# Generate a JWT token. First make sure these two parameters are set in /etc/slurm/slurm.conf:
#AuthAltTypes=auth/jwt
#AuthAltParameters=jwt_key=/etc/slurm/jwt_hs256.key
root@slurmm:/etc/slurm# scontrol token username=root lifespan=2099999999
SLURM_JWT=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjI5MTUzMzY4ODMsImlhdCI6MTY5OTU4NDY5Miwic3VuIjoibXVuZ2UifQ.tgsigBrZTbRqOlM_W-lMRqFQqd4EOU7l25m4aEZy-rc

# The generated token is used in the steps that follow
```
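When a request is later rejected, it helps to know which user a token was issued for and when it expires. The payload of a JWT is plain base64url-encoded JSON, so it can be inspected with the standard library alone. This sketch does not verify the signature; the function names are illustrative, and it assumes the token carries the `exp` (expiry) and `sun` (user name) claims that the sample token in this article decodes to.

```python
# Sketch: inspect the claims of a Slurm JWT without verifying its signature.
import base64
import json
import time


def jwt_claims(token):
    """Decode the payload (middle) segment of a JWT."""
    if token.startswith("SLURM_JWT="):  # accept scontrol's output line as-is
        token = token[len("SLURM_JWT="):]
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def seconds_left(token, now=None):
    """Seconds until the token's `exp` claim; negative if already expired."""
    now = time.time() if now is None else now
    return jwt_claims(token)["exp"] - now
```

Calling `jwt_claims()` on the `SLURM_JWT=...` line printed by `scontrol token` returns the claims as a dictionary.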

- Edit /lib/systemd/system/slurmrestd.service: add SLURM_JWT, User, StandardError and StandardOutput, and change ExecStart to listen on TCP

```shell
[Unit]
Description=Slurm REST daemon
After=network-online.target munge.service slurmctld.service
Wants=network-online.target
ConditionPathExists=/etc/slurm/slurm.conf
Documentation=man:slurmrestd(8)

[Service]
Type=simple
EnvironmentFile=-/etc/default/slurmrestd
# Default to local auth via socket
# ExecStart=/usr/sbin/slurmrestd $SLURMRESTD_OPTIONS unix:/run/slurmrestd.socket
# Uncomment to enable listening mode
#Environment="SLURM_JWT=daemon"
Environment="SLURM_JWT=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjI5MTUzMzY4ODMsImlhdCI6MTY5OTU4NDY5Miwic3VuIjoibXVuZ2UifQ.tgsigBrZTbRqOlM_W-lMRqFQqd4EOU7l25m4aEZy-rc"
ExecStart=/usr/sbin/slurmrestd $SLURMRESTD_OPTIONS 0.0.0.0:6820
ExecReload=/bin/kill -HUP $MAINPID
User=munge
StandardError=append:/var/log/slurm/slurmrestd.log
StandardOutput=append:/var/log/slurm/slurmrestd.log

[Install]
WantedBy=multi-user.target
```

3.4 Start the services and enable them at boot

```shell
systemctl daemon-reload
systemctl start slurmctld
systemctl start slurmdbd
systemctl start slurmrestd
systemctl enable slurmctld # start at boot
systemctl enable slurmdbd # start at boot
systemctl enable slurmrestd # start at boot
```

4. Worker node configuration

4.1 Install the base component (slurmd)

```shell
export DEBIAN_FRONTEND=noninteractive
apt install slurmd mailutils libhttp-parser-dev libjson-c-dev libjwt-dev libyaml-dev libpmix-dev
systemctl start slurmd
systemctl enable slurmd # start at boot

# Worker nodes here are deployed in configless mode, so there is no need to create
# /etc/slurm/slurm.conf on them. slurmd on the worker fetches slurm.conf from the
# slurmctld node and stores it under /run/slurm/conf on the worker.
```

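- Configure slurmd for configless operation (/lib/systemd/system/slurmd.service)

- Add the --conf-server slurmm:6817 option to ExecStart

- Comment out ConditionPathExists=/etc/slurm/slurm.conf

- Note that 'ExecStartPre=-nvidia-smi' is not required. Why I added it is left as an exercise for the reader.

- Note that 'StartLimitInterval=500, StartLimitBurst=15, Restart=always, RestartSec=30' are not required either. The reason is again left for the reader to work out.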

```shell
[Unit]
Description=Slurm node daemon
After=munge.service network-online.target remote-fs.target
Wants=network-online.target
#ConditionPathExists=/etc/slurm/slurm.conf
Documentation=man:slurmd(8)
StartLimitInterval=500
StartLimitBurst=15

[Service]
Type=simple
EnvironmentFile=-/etc/default/slurmd
ExecStartPre=-nvidia-smi
ExecStart=/usr/sbin/slurmd --conf-server slurmm:6817 -D -s $SLURMD_OPTIONS
ExecReload=/bin/kill -HUP $MAINPID
PIDFile=/run/slurmd.pid
KillMode=process
LimitNOFILE=131072
LimitMEMLOCK=infinity
LimitSTACK=infinity
Delegate=yes
TasksMax=infinity
Restart=always
RestartSec=30

[Install]
WantedBy=multi-user.target
```

- Restart slurmd

```shell
systemctl daemon-reload
systemctl restart slurmd
systemctl enable slurmd
```

5. Checking the cluster state (scontrol show node xx)

- worker1 node

```shell
root@slurmm:~# scontrol show node slurmm
NodeName=slurmm Arch=x86_64 CoresPerSocket=18
   CPUAlloc=0 CPUEfctv=36 CPUTot=36 CPULoad=0.00
   AvailableFeatures=(null)
   ActiveFeatures=(null)
   Gres=gpu:NVIDIA_GeForce_RTX_2080_Ti:2
   NodeAddr=slurmm NodeHostName=slurmm Version=23.02.3
   OS=Linux 5.15.0-89-generic #99-Ubuntu SMP Mon Oct 30 20:42:41 UTC 2023
   RealMemory=31921 AllocMem=0 FreeMem=28177 Sockets=1 Boards=1
   State=IDLE ThreadsPerCore=2 TmpDisk=0 Weight=1 Owner=N/A MCS_label=N/A
   Partitions=debug
   BootTime=2023-11-26T17:28:43 SlurmdStartTime=2023-11-26T17:29:08
   LastBusyTime=2023-11-26T17:36:03 ResumeAfterTime=None
   CfgTRES=cpu=36,mem=31921M,billing=36
   AllocTRES=
   CapWatts=n/a
   CurrentWatts=0 AveWatts=0
   ExtSensorsJoules=n/s ExtSensorsWatts=0 ExtSensorsTemp=n/s
```

- worker2 node

```shell
root@slurmm:~# scontrol show node slurmn
NodeName=slurmn Arch=x86_64 CoresPerSocket=18
   CPUAlloc=0 CPUEfctv=36 CPUTot=36 CPULoad=0.00
   AvailableFeatures=(null)
   ActiveFeatures=(null)
   Gres=gpu:NVIDIA_GeForce_RTX_3060_Ti:1
   NodeAddr=slurmn NodeHostName=slurmn Version=23.02.3
   OS=Linux 5.15.0-89-generic #99-Ubuntu SMP Mon Oct 30 20:42:41 UTC 2023
   RealMemory=15819 AllocMem=0 FreeMem=14790 Sockets=1 Boards=1
   State=IDLE ThreadsPerCore=2 TmpDisk=0 Weight=1 Owner=N/A MCS_label=N/A
   Partitions=debug
   BootTime=2023-11-26T17:28:05 SlurmdStartTime=2023-11-26T17:29:20
   LastBusyTime=2023-11-26T17:29:20 ResumeAfterTime=None
   CfgTRES=cpu=36,mem=15819M,billing=36
   AllocTRES=
   CapWatts=n/a
   CurrentWatts=0 AveWatts=0
   ExtSensorsJoules=n/s ExtSensorsWatts=0 ExtSensorsTemp=n/s
```
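The two fields worth checking first in this output are `State` and `Gres`. As a rough sketch, the `Key=Value` pairs can be pulled out with a regular expression and checked in a script; `parse_node` and `node_usable` are illustrative names, and the simple pattern keeps only the first word of values that contain spaces (such as `OS=`).

```python
# Sketch: extract Key=Value pairs from `scontrol show node` output and check
# that the node is schedulable and reports the expected GPUs.
import re


def parse_node(text):
    """Return a dict of Key=Value pairs (values end at the first whitespace)."""
    return dict(re.findall(r"(\w+)=(\S*)", text))


def node_usable(text, expected_gres):
    """True if the node is neither DOWN nor DRAINed and Gres matches."""
    info = parse_node(text)
    state = info.get("State", "")
    healthy = "DOWN" not in state and "DRAIN" not in state
    return healthy and info.get("Gres") == expected_gres


sample = """NodeName=slurmn Arch=x86_64 CoresPerSocket=18
   Gres=gpu:NVIDIA_GeForce_RTX_3060_Ti:1
   State=IDLE ThreadsPerCore=2 TmpDisk=0 Weight=1 Owner=N/A MCS_label=N/A
   CfgTRES=cpu=36,mem=15819M,billing=36
   AllocTRES=
"""
print(node_usable(sample, "gpu:NVIDIA_GeForce_RTX_3060_Ti:1"))
```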

6. Submitting jobs through slurmrestd

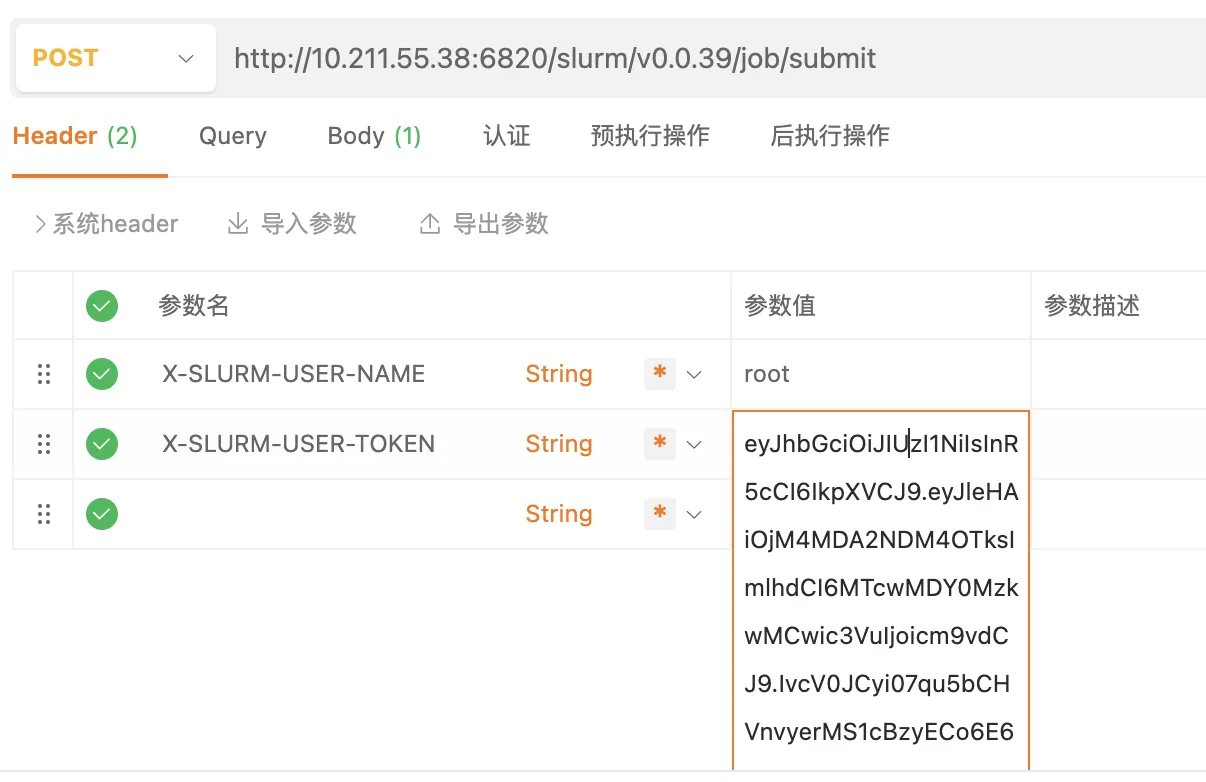

6.1 apipost setup

- This example uses the apipost tool for REST testing: https://www.apipost.cn/

- Add two headers: X-SLURM-USER-NAME (root) and X-SLURM-USER-TOKEN (the SLURM_JWT token generated earlier)

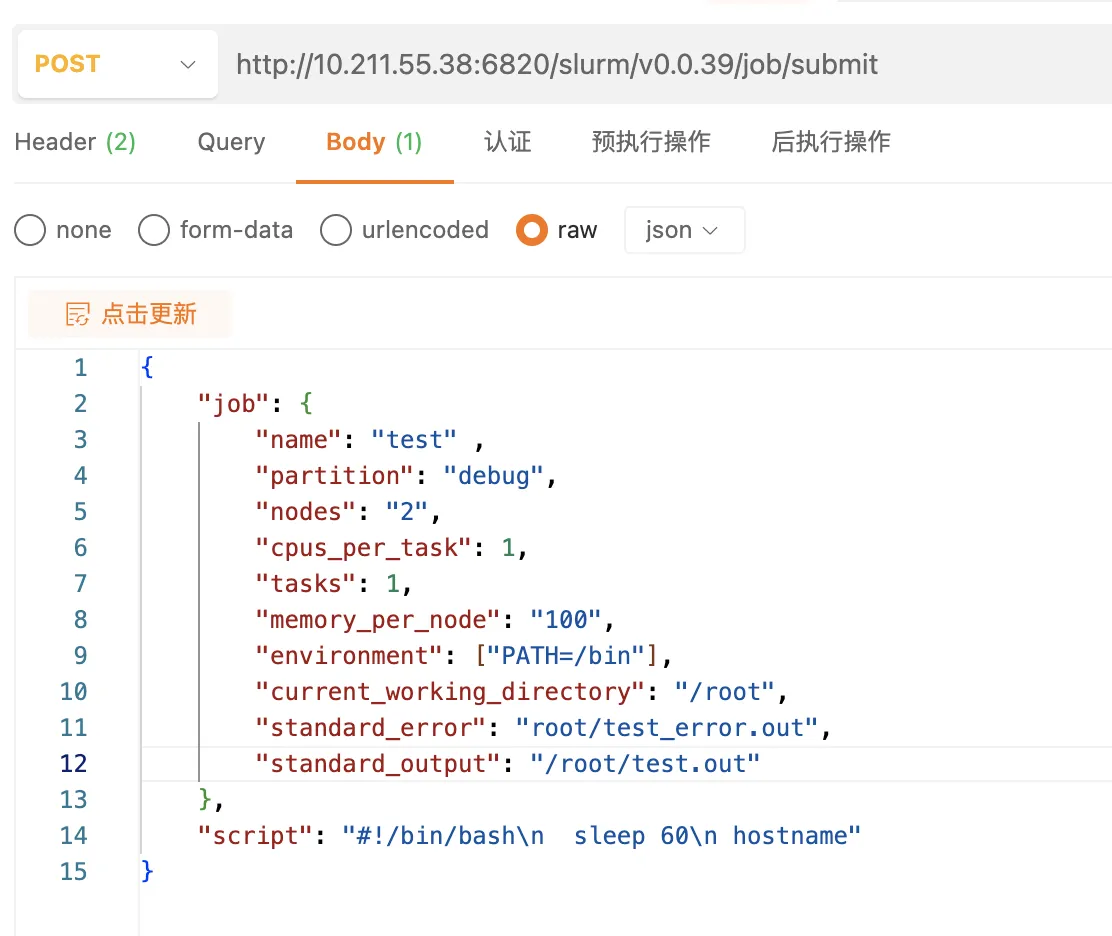

6.2 CPU job example

- Add the body of the test CPU job (raw JSON)

- Submitting the job returns the following:

```json
{
  "meta": {
    "plugin": {
      "type": "openapi\/v0.0.39",
      "name": "Slurm OpenAPI v0.0.39",
      "data_parser": "v0.0.39"
    },
    "client": {
      "source": "[10.211.55.2]:53550"
    },
    "Slurm": {
      "version": {
        "major": 23,
        "micro": 3,
        "minor": 2
      },
      "release": "23.02.3"
    }
  },
  "errors": [],
  "warnings": [],
  "result": {
    "job_id": 2,
    "step_id": "batch",
    "error_code": 0,
    "error": "No error",
    "job_submit_user_msg": ""
  },
  "job_id": 2,
  "step_id": "batch",
  "job_submit_user_msg": ""
}
```

- The complete request as a shell command:

```shell
curl --request POST \
  --url http://10.211.55.38:6820/slurm/v0.0.39/job/submit \
  --header 'X-SLURM-USER-NAME: root' \
  --header 'X-SLURM-USER-TOKEN: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjM4MDA2NDM4OTksImlhdCI6MTcwMDY0MzkwMCwic3VuIjoicm9vdCJ9.IvcV0JCyi07qu5bCHVnvyerMS1cBzyECo6E68otFrsM' \
  --header 'content-type: application/json' \
  --data '{
    "job": {
      "name": "test",
      "partition": "debug",
      "nodes": "2",
      "cpus_per_task": 1,
      "tasks": 1,
      "memory_per_node": "100",
      "environment": ["PATH=/bin"],
      "current_working_directory": "/root",
      "standard_error": "/root/test_error.out",
      "standard_output": "/root/test.out"
    },
    "script": "#!/bin/bash\n sleep 60\n hostname"
  }'
```
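The same request can be assembled from a script. The sketch below uses only the Python standard library and mirrors the curl command: the endpoint path, header names and body fields come from the request above, while `build_job` and `build_request` are illustrative helper names and the body is trimmed to a subset of the fields. Nothing is sent until the request is passed to `urllib.request.urlopen`.

```python
# Sketch: build (but do not send) the slurmrestd job submission request.
import json
import urllib.request

API_VERSION = "v0.0.39"  # matches the plugin version reported in the response


def build_job(script, name="test", partition="debug", cwd="/root"):
    """Return a job submission body with a subset of the fields used above."""
    return {
        "job": {
            "name": name,
            "partition": partition,
            "tasks": 1,
            "environment": ["PATH=/bin"],
            "current_working_directory": cwd,
            "standard_output": f"{cwd}/test.out",
            "standard_error": f"{cwd}/test_error.out",
        },
        "script": script,
    }


def build_request(base_url, user, token, payload):
    """Wrap the payload in a POST request carrying the two auth headers."""
    return urllib.request.Request(
        f"{base_url}/slurm/{API_VERSION}/job/submit",
        data=json.dumps(payload).encode(),
        headers={
            "X-SLURM-USER-NAME": user,
            "X-SLURM-USER-TOKEN": token,
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request(
    "http://192.168.11.111:6820", "root", "<SLURM_JWT value>",
    build_job("#!/bin/bash\nhostname"),
)
print(request.get_method(), request.full_url)
# To submit for real: urllib.request.urlopen(request).read()
```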

6.3 GPU job examples

6.3.1 A TensorFlow example

- Install the TensorFlow libraries on the test node

```shell
python3 -m pip install 'tensorflow[and-cuda]' -i https://pypi.tuna.tsinghua.edu.cn/simple
```

- Create the test script on the test node: /root/test_gpu.py

```python
import tensorflow as tf
import time

# Check if GPU is available
print("Is GPU available: ", tf.test.is_gpu_available())

# Set the size of the matrices
matrix_size = 20000

# Create random matrices
matrix1 = tf.random.normal([matrix_size, matrix_size], mean=0, stddev=1)
matrix2 = tf.random.normal([matrix_size, matrix_size], mean=0, stddev=1)

# Create a graph for matrix multiplication
@tf.function
def matrix_multiply(a, b):
    return tf.matmul(a, b)

# Start time
start_time = time.time()

# Force the operation to run on GPU
with tf.device('/GPU:0'):
    result = matrix_multiply(matrix1, matrix2)

# Make sure the computation is complete
print(result.numpy())  # This line will also force the graph to execute

# End time
end_time = time.time()

# Calculate and print the total time taken
total_time = end_time - start_time
print("Total time taken on GPU: {:.2f} seconds".format(total_time))
```

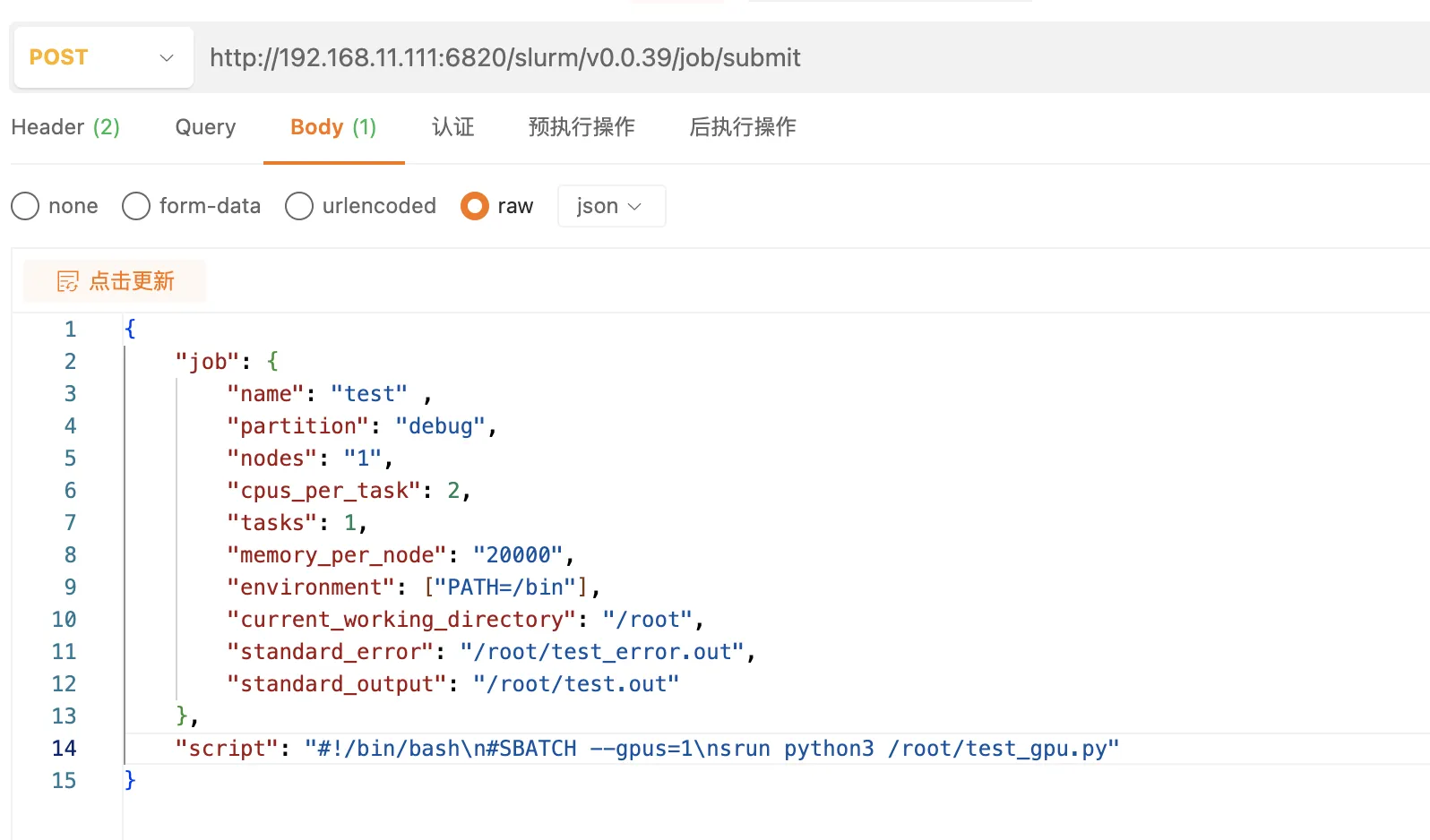

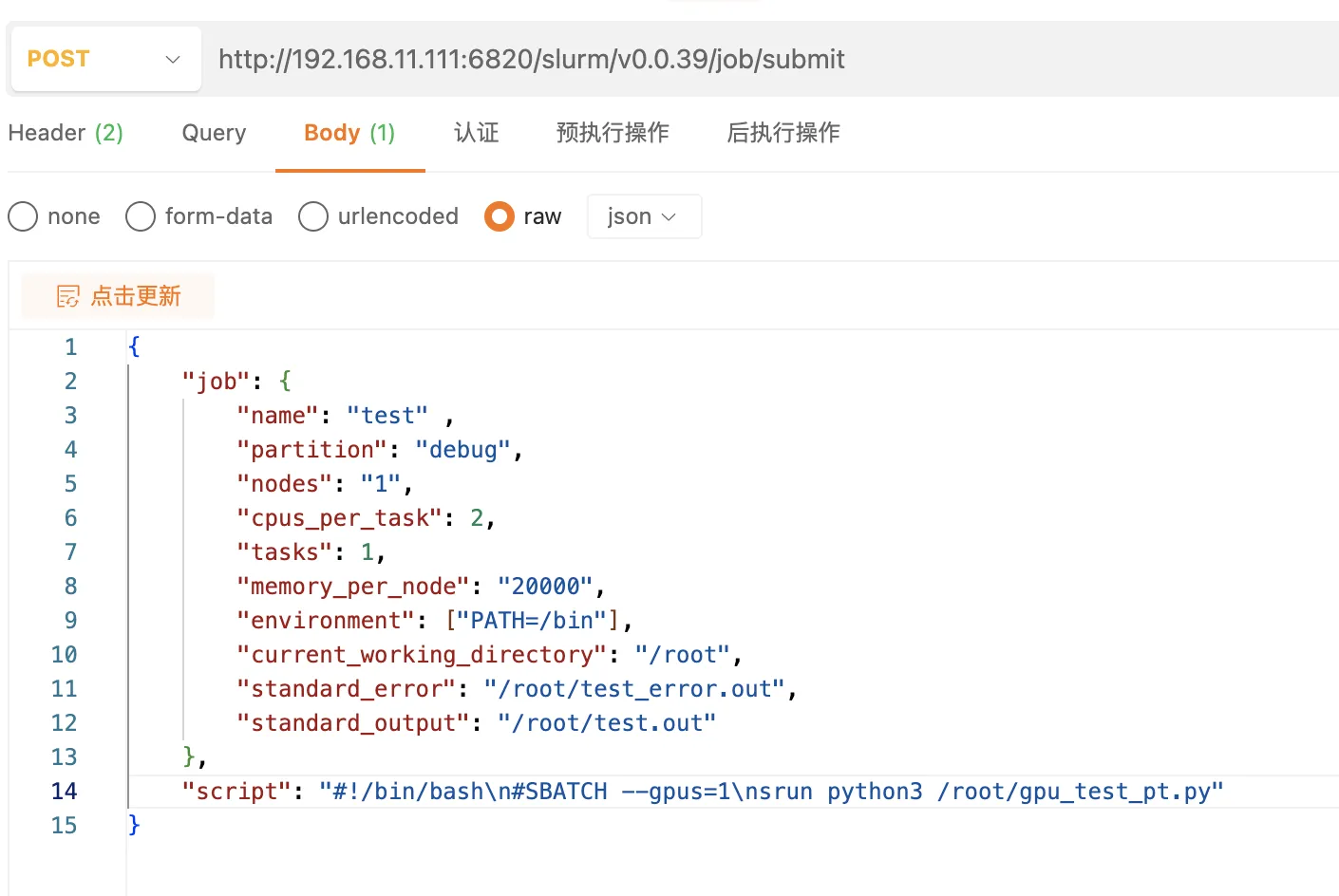

- Run the test job through apipost

- The body is as follows:

```json
{
  "job": {
    "name": "test",
    "partition": "debug",
    "nodes": "1",
    "cpus_per_task": 2,
    "tasks": 1,
    "memory_per_node": "20000",
    "environment": ["PATH=/bin"],
    "current_working_directory": "/root",
    "standard_error": "/root/test_error.out",
    "standard_output": "/root/test.out"
  },
  "script": "#!/bin/bash\n#SBATCH --gpus=1\nsrun python3 /root/test_gpu.py"
}
```

- The complete request as a shell command:

```shell
curl --request POST \
  --url http://192.168.11.111:6820/slurm/v0.0.39/job/submit \
  --header 'X-SLURM-USER-NAME: root' \
  --header 'X-SLURM-USER-TOKEN: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjM4MDA5MDQzOTgsImlhdCI6MTcwMDkwNDM5OSwic3VuIjoicm9vdCJ9.X1NKkfWOw6EBuqYkMJE6Tt82Wg7V7OXIPqIT7ULrZro' \
  --header 'content-type: application/json' \
  --data '{
    "job": {
      "name": "test",
      "partition": "debug",
      "nodes": "1",
      "cpus_per_task": 2,
      "tasks": 1,
      "memory_per_node": "20000",
      "environment": ["PATH=/bin"],
      "current_working_directory": "/root",
      "standard_error": "/root/test_error.out",
      "standard_output": "/root/test.out"
    },
    "script": "#!/bin/bash\n#SBATCH --gpus=1\nsrun python3 /root/test_gpu.py"
  }'
```

- GPU usage can be observed while the job is running

```shell
root@slurmm:~# nvidia-smi
Sat Nov 25 19:55:32 2023
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.129.03             Driver Version: 535.129.03   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA GeForce RTX 2080 Ti     Off | 00000000:03:00.0 Off |                  N/A |
|  0%   36C    P2              74W / 300W |   9975MiB / 11264MiB |     43%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+
|   1  NVIDIA GeForce RTX 2080 Ti     Off | 00000000:04:00.0 Off |                  N/A |
|  0%   33C    P2              62W / 250W |    157MiB / 11264MiB |      0%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|    0   N/A  N/A     15829      C   /bin/python3                               9972MiB |
|    1   N/A  N/A     15829      C   /bin/python3                                154MiB |
+---------------------------------------------------------------------------------------+
```

- The script's final output:

```shell
root@slurmm:~# cat test.out
Is GPU available: True
[[ 59.486763 -362.00967 204.07918 ... 189.27332 19.941751 34.25493 ]
 [ 55.790306 -81.3623 188.55533 ... 244.43982 16.179585 -78.40474 ]
 [ 38.568657 -71.326645 -67.5823 ... -19.634632 -18.1874 5.91805 ]
 ...
 [-231.95425 -69.604866 -89.70132 ... -146.36386 143.33617 38.38358 ]
 [-175.92284 95.84435 -136.62596 ... 13.935053 41.47327 -70.68144 ]
 [ 178.22328 17.50257 -105.80156 ... -63.366543 46.260273 162.085 ]]
Total time taken on GPU: 2.43 seconds
```

6.3.2 A PyTorch example

- Install the torch libraries on the test node

```shell
pip install torchvision -i https://pypi.tuna.tsinghua.edu.cn/simple
```

- Create the test script on the test node: /root/gpu_test_pt.py

```python
import torch
import time

# Check whether a GPU is available and use it if so
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print("Using device:", device)

# Set the matrix size
matrix_size = 20000  # adjust to what your GPU can handle

# Create random matrices directly on the chosen device
matrix1 = torch.randn(matrix_size, matrix_size, device=device)
matrix2 = torch.randn(matrix_size, matrix_size, device=device)

# Start timing
start_time = time.time()

# Run several matrix multiplications
result = matrix1
for _ in range(5):  # adjust the loop count to increase the workload
    result = torch.matmul(result, matrix2)

# Make sure the computation has finished (the copy to the CPU is only for printing)
result_cpu = result.cpu().numpy()
print(result_cpu)

# Stop timing
end_time = time.time()

# Compute and print the total elapsed time
total_time = end_time - start_time
print("Total time taken on GPU: {:.2f} seconds".format(total_time))
```

- Run the test script through apipost

- The body is as follows:

```json
{
  "job": {
    "name": "test",
    "partition": "debug",
    "nodes": "1",
    "cpus_per_task": 2,
    "tasks": 1,
    "memory_per_node": "20000",
    "environment": ["PATH=/bin"],
    "current_working_directory": "/root",
    "standard_error": "/root/test_error.out",
    "standard_output": "/root/test.out"
  },
  "script": "#!/bin/bash\n#SBATCH --gpus=1\nsrun python3 /root/gpu_test_pt.py"
}
```

- The complete request as a shell command:

```shell
curl --request POST \
  --url http://192.168.11.111:6820/slurm/v0.0.39/job/submit \
  --header 'X-SLURM-USER-NAME: root' \
  --header 'X-SLURM-USER-TOKEN: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjM4MDA5MDQzOTgsImlhdCI6MTcwMDkwNDM5OSwic3VuIjoicm9vdCJ9.X1NKkfWOw6EBuqYkMJE6Tt82Wg7V7OXIPqIT7ULrZro' \
  --header 'content-type: application/json' \
  --data '{
    "job": {
      "name": "test",
      "partition": "debug",
      "nodes": "1",
      "cpus_per_task": 2,
      "tasks": 1,
      "memory_per_node": "20000",
      "environment": ["PATH=/bin"],
      "current_working_directory": "/root",
      "standard_error": "/root/test_error.out",
      "standard_output": "/root/test.out"
    },
    "script": "#!/bin/bash\n#SBATCH --gpus=1\nsrun python3 /root/gpu_test_pt.py"
  }'
```

- GPU usage while the job is running:

```shell
root@slurmm:~# nvidia-smi
Sat Nov 25 20:18:48 2023
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.129.03             Driver Version: 535.129.03   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA GeForce RTX 2080 Ti     Off | 00000000:03:00.0 Off |                  N/A |
|  0%   42C    P2             290W / 300W |   6293MiB / 11264MiB |    100%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+
|   1  NVIDIA GeForce RTX 2080 Ti     Off | 00000000:04:00.0 Off |                  N/A |
|  0%   31C    P0              62W / 250W |      3MiB / 11264MiB |      1%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|    0   N/A  N/A     16353      C   /bin/python3                               6290MiB |
+---------------------------------------------------------------------------------------+
```

- The job output:

```shell
root@slurmm:~# cat test.out
Using device: cuda
[[ 6.3689298e+10 -2.9476262e+10 -1.4370834e+10 ... -1.4405234e+10 -8.7143227e+10 -6.6037182e+10]
 [-3.6845511e+10 3.0736091e+10 2.1430974e+10 ... 2.3678956e+10 7.0674145e+10 -1.5263285e+10]
 [ 2.5857253e+10 8.9785426e+10 7.0330409e+10 ... 8.3582935e+10 4.4033827e+10 -3.9315169e+10]
 ...
 [-1.3963832e+10 5.1874226e+10 -3.2410958e+10 ... -4.3518575e+10 -9.9068609e+10 -2.3638583e+10]
 [ 3.1754074e+09 -9.9762483e+09 -5.2393665e+10 ... 5.3489369e+10 9.0028827e+10 3.2337533e+10]
 [ 4.9150353e+10 5.9068326e+10 9.5366513e+10 ... 3.0448054e+10 2.3199865e+10 4.2562814e+10]]
Total time taken on GPU: 7.89 seconds
```

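7. Slurm notes (continuously updated)

7.1 Changes to the node configuration in slurm.conf require restarting the slurmctld and slurmd services

7.2 In each node line only NodeName is required; all other fields are optional

7.3 Any node whose actual resources are lower than the configured values is set to 'DOWN', so that no jobs are scheduled on it

7.4 Key fields explained: https://www.cnblogs.com/liu-shaobo/p/16213528.html

- Sockets: number of physical chips/sockets on the node

- CoresPerSocket: number of cores per physical CPU socket

- ThreadsPerCore: number of logical threads per CPU core

- CPUs: number of logical CPUs

- RealMemory: amount of memory, in MB

- State: node state, UNKNOWN by default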
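These fields are related: the logical CPU count is the product of sockets, cores per socket and threads per core, which for the nodes in this article gives 1 × 18 × 2 = 36. A small sketch that checks a `NodeName=` line for this consistency follows; the helper names are made up for illustration, and the socket count is taken as `Boards × SocketsPerBoard` when `Sockets` is not given.

```python
# Sketch: check that CPUs equals Sockets x CoresPerSocket x ThreadsPerCore
# in a slurm.conf NodeName line.

def parse_node_line(line):
    """Split a NodeName=... line into a {key: value} dict."""
    return dict(item.split("=", 1) for item in line.split())


def cpus_consistent(line):
    """True when the CPUs field matches the product of the topology fields."""
    f = parse_node_line(line)
    if "Sockets" in f:
        sockets = int(f["Sockets"])
    else:
        sockets = int(f.get("Boards", 1)) * int(f.get("SocketsPerBoard", 1))
    expected = sockets * int(f["CoresPerSocket"]) * int(f["ThreadsPerCore"])
    return int(f["CPUs"]) == expected


line = ("NodeName=slurmm CPUs=36 Boards=1 SocketsPerBoard=1 CoresPerSocket=18 "
        "ThreadsPerCore=2 RealMemory=31921 State=UNKNOWN "
        "Gres=gpu:NVIDIA_GeForce_RTX_2080_Ti:2")
print(cpus_consistent(line))
```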

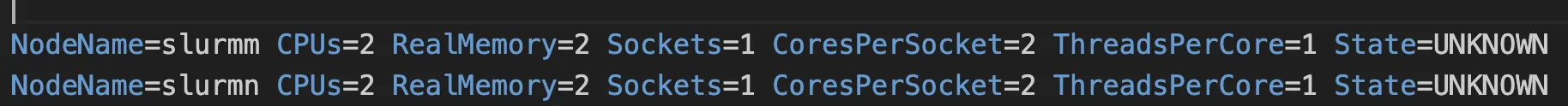

7.5 slurmd -C prints the fields from 7.4 for a compute node; its output can be pasted into slurm.conf

```shell
root@slurmm:~# slurmd -C
NodeName=slurmm CPUs=2 Boards=1 SocketsPerBoard=1 CoresPerSocket=2 ThreadsPerCore=1 RealMemory=1956
UpTime=0-01:46:12
```
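Turning that output into a slurm.conf entry means dropping the `UpTime` field and appending `State` and, on GPU nodes, `Gres`. A minimal sketch of that transformation, with `node_line_from_slurmd` as an illustrative name:

```python
# Sketch: convert `slurmd -C` output into a slurm.conf NodeName line.

def node_line_from_slurmd(output, gres=None, state="UNKNOWN"):
    """Keep the hardware fields, drop UpTime, append State and optional Gres."""
    fields = [tok for tok in output.split() if not tok.startswith("UpTime=")]
    fields.append(f"State={state}")
    if gres:
        fields.append(f"Gres={gres}")
    return " ".join(fields)


output = """NodeName=slurmm CPUs=2 Boards=1 SocketsPerBoard=1 CoresPerSocket=2 ThreadsPerCore=1 RealMemory=1956
UpTime=0-01:46:12
"""
print(node_line_from_slurmd(output, gres="gpu:NVIDIA_GeForce_RTX_2080_Ti:2"))
```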

7.6 Installing the NVIDIA driver in silent mode

```shell
./NVIDIA-Linux-x86_64-535.129.03.run -q -a --ui=none
```


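Author: 王海生

Article link:

Copyright notice: unless otherwise stated, all articles on this blog are licensed under the MIT License. Please credit the source when reposting.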
